The Explainable AI Imperative: Why Black Box AI is a Risk Management Nightmare

Post Summary

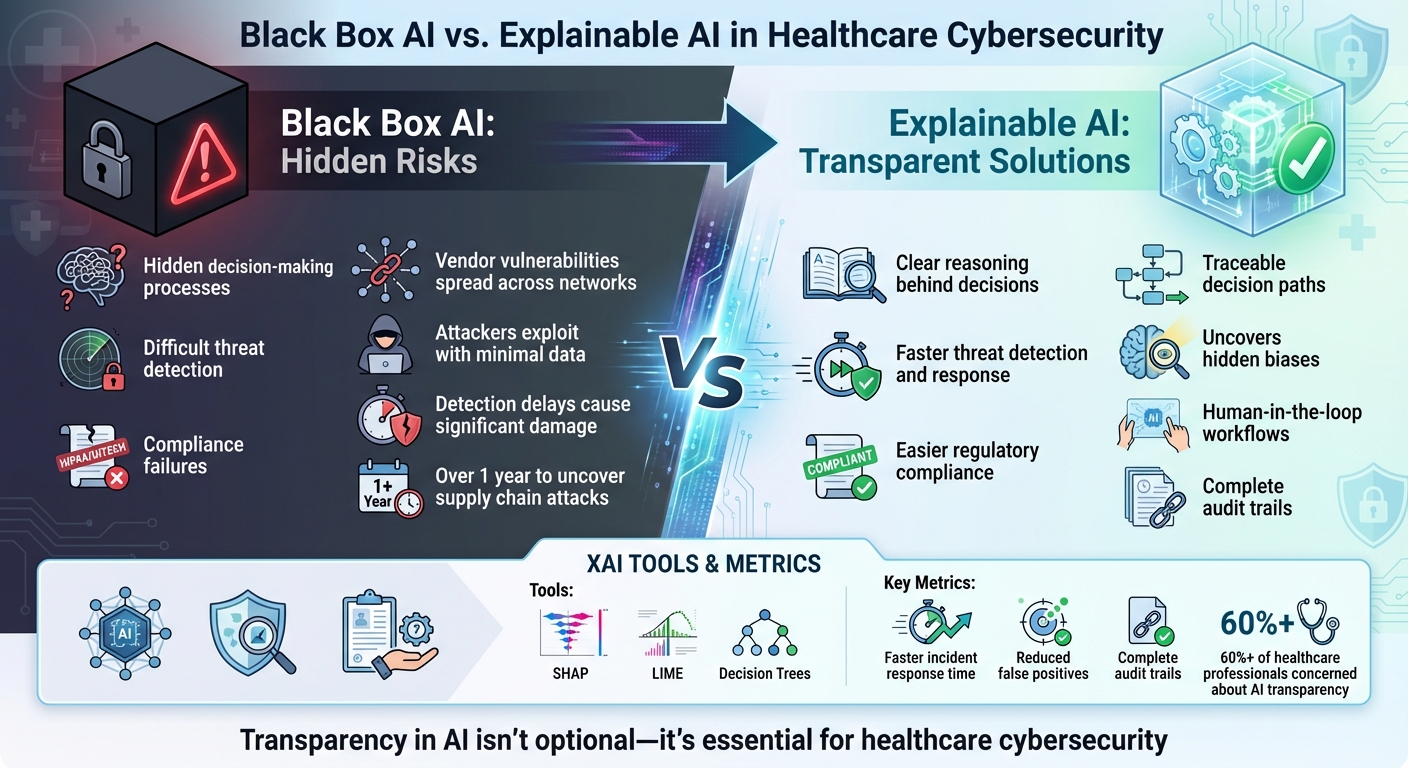

Black box AI's opacity hides model behavior, making it difficult to detect attacks, prove compliance, or validate decisions.

Black box models make HIPAA audits difficult, obscure PHI access, and create legal gaps in accountability.

XAI reveals how decisions are made, detects hidden biases, and provides traceable reasoning for alerts and risk scores.

Tools like SHAP and LIME clarify vendor risk scores, security weaknesses, and data‑handling practices.

Censinet RiskOps™ automates assessments, routes findings to reviewers, summarizes evidence, and preserves human oversight.

Explainability builds trust, strengthens governance, reduces bias, and ensures accountability in mission‑critical workflows.

Black box AI systems are a growing risk in healthcare cybersecurity. These opaque models make decisions without revealing how they arrived at them, leaving organizations vulnerable to security breaches, compliance failures, and operational risks. Attackers can exploit these blind spots, often with minimal effort, causing significant harm.

The solution? Explainable AI (XAI). Unlike black box systems, XAI provides transparency, making it easier to understand, validate, and trust AI-driven decisions. This clarity is crucial in healthcare, where patient safety and regulatory compliance are at stake.

Key Takeaways:

Bottom line: Transparency in AI isn't optional - it's a necessity for safeguarding healthcare systems and protecting patient data.

Black Box AI vs Explainable

Cybersecurity

The Risks of Black Box AI in Healthcare Cybersecurity

Black box AI systems pose serious challenges to protecting patient data and maintaining operational security in healthcare. These systems often lead to regulatory complications, security weaknesses, and vulnerabilities within vendor networks. Together, these risks can ripple through entire healthcare ecosystems, creating significant threats.

Compliance Failures and Regulatory Challenges

Healthcare organizations are required to safeguard Protected Health Information (PHI) under laws like HIPAA and HITECH. However, black box AI systems make this job much harder. When these systems flag potential threats or access sensitive data, auditors need to understand how decisions were made to ensure compliance. The problem? Black box AI lacks transparency, making it difficult - if not impossible - to verify whether PHI was mishandled or whether privacy rules were breached [6][7]. Without clear documentation of how decisions are reached, organizations struggle to prove compliance during audits or investigations. This opacity increases legal risks and can leave organizations exposed to penalties. On top of that, hidden vulnerabilities in these systems make them attractive targets for advanced cyberattacks, further complicating compliance efforts.

Hidden Cybersecurity Vulnerabilities

The lack of transparency in black box AI systems creates blind spots that weaken threat detection and response. Research shows that attackers can exploit these systems with minimal data, achieving high success rates [1][2]. Because these systems obscure how they process threat intelligence, organizations often fail to detect adversarial attacks, data breaches, or ransomware until significant damage has already occurred. This delayed awareness leaves healthcare networks highly vulnerable to sophisticated cyber threats.

Vendor and Supply Chain Risks

Third-party vendors supplying black box AI tools introduce another layer of risk. These tools often conceal critical details about how data is handled, what security measures are in place, and how models are updated. A single compromised vendor can spread vulnerabilities across multiple healthcare institutions simultaneously [1][2], creating large-scale systemic risks. The decentralized nature of healthcare systems, combined with the trust placed in vendors, makes supply chain attacks particularly hard to detect - sometimes taking over a year to uncover, if they are discovered at all [2]. With little insight into how vendor-supplied AI operates or updates, healthcare organizations are left to rely on vendor assurances without the ability to independently verify their claims. This lack of visibility not only complicates compliance but also leaves healthcare systems more exposed to operational disruptions and cyberattacks.

Explainable AI: A Path to Safer Cybersecurity Practices

The risks associated with black box AI have made the need for transparency in decision-making more urgent than ever. Enter Explainable AI (XAI) - a solution that prioritizes clarity and human oversight while aligning with regulatory and security requirements. Unlike opaque systems, XAI fosters trust by bridging the gap between complex AI models and human understanding. This approach not only addresses compliance and security challenges but also ensures that decision-making remains accessible and accountable. Let’s dive into what XAI is and why it plays a crucial role in cybersecurity.

What is Explainable AI (XAI)?

At its core, Explainable AI is all about clarity. It’s a framework designed to make AI decisions understandable to humans. While traditional black box systems keep their inner workings hidden, XAI provides transparency, enabling professionals like clinicians, administrators, and auditors to review and assess how decisions are made [10].

XAI encompasses two main types of models:

By opening the "black box", XAI ensures that AI-driven decisions are not just accurate but also explainable.

XAI Capabilities for Risk Management

XAI’s transparency makes it a powerful tool for managing risks. By providing clear explanations for flagged risks or vendor scores, it helps organizations take a more informed approach to identifying, assessing, and mitigating potential threats [8].

One of XAI’s standout features is its ability to uncover hidden biases in AI models. This is particularly important in sensitive areas like patient data security, where fairness and non-discrimination are critical. Additionally, XAI’s traceable decision paths allow security teams to quickly pinpoint and resolve errors, enhancing both model performance and safety. These benefits are essential for meeting stringent regulations like GDPR and HIPAA [10].

Governance Patterns for Explainable AI

To fully harness the potential of XAI, organizations need to implement strong governance practices. Human-in-the-loop workflows are a key part of this strategy, ensuring that humans remain in control of critical decisions. By reviewing and validating AI outputs, these workflows prevent over-reliance on automation and maintain accountability [8].

Another vital aspect is rigorous model validation. This process helps stakeholders understand and trust AI decisions, reinforcing fairness and transparency. Keeping detailed logs of AI decision-making further supports audit and regulatory compliance, creating a solid foundation for the safe and scalable use of AI. These measures not only protect sensitive data but also uphold operational security, making XAI a cornerstone of modern cybersecurity practices.
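One way to make decision logs useful in an audit is to make them tamper-evident. The sketch below is a minimal illustration, not a prescribed design: it chains each log entry to the previous one with a SHA-256 hash, so a later edit to any record breaks verification. The class name `DecisionLog`, its fields, and the sample values are all hypothetical, and the log stores a digest of the inputs rather than raw PHI.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class DecisionLog:
    """Append-only log in which each entry's hash covers the previous entry's hash."""
    entries: list = field(default_factory=list)

    def append(self, timestamp, model_version, inputs_digest, output, explanation, reviewer=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        record = {
            "timestamp": timestamp,          # supplied by the caller, e.g. an ISO 8601 string
            "model_version": model_version,
            "inputs_digest": inputs_digest,  # digest of the inputs, never raw PHI
            "output": output,
            "explanation": explanation,      # e.g. per-feature contributions
            "reviewer": reviewer,            # human who validated the output, if any
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    @staticmethod
    def _digest(record):
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def verify(self):
        """Return True only if every entry is unmodified and correctly chained."""
        prev_hash = "0" * 64
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = DecisionLog()
log.append(
    "2025-01-01T00:00:00Z",
    "ids-model-0.1",
    hashlib.sha256(b"example-event").hexdigest(),
    "alert",
    {"failed_logins": 0.6, "off_hours_access": 0.3},
    reviewer="analyst_a",
)
print(log.verify())
```

A hash chain only detects edits made after the fact. Someone who can rewrite the whole log can also recompute every hash, so in practice the latest hash would need to be anchored somewhere the log writer cannot change.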


Practical Applications of XAI in Healthcare Cybersecurity

Healthcare organizations are leveraging Explainable AI (XAI) to improve transparency, strengthen threat detection, and ensure compliance. Here's a closer look at how XAI is reshaping cybersecurity in the healthcare sector.

Using XAI for Vendor and Risk Assessments

Third-party vendors are a major cybersecurity challenge in healthcare. Whether it's medical device manufacturers or cloud service providers, each connection can introduce potential vulnerabilities. XAI is changing the game by making AI-driven security decisions more transparent and easier to audit.

A 2025 study published in Electronics demonstrated an XAI-powered intrusion detection system for the Internet of Medical Things (IoMT). This framework utilized techniques like K-Means, PCA, SHAP, PDP, and ALE to combine network and biosensor data, improving both threat detection and transparency [4].
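Of the techniques listed, a partial dependence plot (PDP) is the simplest to illustrate. The sketch below is not the study's implementation. It shows the basic idea under stated assumptions: fix one feature at each value on a grid, leave the other features as observed, and average the model's output. The detector, feature names, and data are hypothetical.

```python
def partial_dependence(model, rows, feature_index, grid):
    """For each grid value, overwrite one feature in every row and average the model output."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value
            total += model(modified)
        curve.append((value, total / len(rows)))
    return curve


def toy_detector(row):
    """Hypothetical intrusion score from (packets_per_second, heart_rate_bpm)."""
    packets_per_second, heart_rate_bpm = row
    score = 0.002 * packets_per_second
    if heart_rate_bpm > 150:  # an implausible biosensor reading adds suspicion
        score += 0.3
    return min(score, 1.0)


# Made-up observations combining a network feature and a biosensor feature.
traffic = [(50, 70), (120, 82), (300, 160), (80, 65)]
curve = partial_dependence(toy_detector, traffic, feature_index=0, grid=[0, 100, 200, 400])
for value, mean_score in curve:
    print(value, round(mean_score, 3))
```

The resulting curve shows how the average score moves as packet rate rises, which gives a reviewer a global view of what the detector has learned about that one feature.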

Tools like SHAP and LIME play a key role by breaking down vendor risk scores into understandable factors, such as outdated encryption protocols or poor patch management. The underlying pipeline runs from data collection, preprocessing, and feature selection through model training to a final explanation step that turns complex outputs into actionable insights [4]. Analysts can then pinpoint the specific factors driving a risk score, which makes it easier to act quickly and document decisions for audits.
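To make the idea of breaking a score into factors concrete, here is a minimal sketch of the Shapley value calculation that SHAP approximates. It does not use the `shap` library. It enumerates every feature coalition by brute force, which is only practical for a handful of features. The scoring function, feature names, and weights are invented for illustration and do not represent any real vendor risk model.

```python
from itertools import combinations
from math import factorial

# Hypothetical vendor features (1 = weakness present, 0 = absent).
FEATURES = ["outdated_encryption", "poor_patch_management", "no_mfa"]
BASELINE = {"outdated_encryption": 0, "poor_patch_management": 0, "no_mfa": 0}


def risk_score(x):
    """Toy stand-in for an opaque vendor risk model (assumed, not a real formula)."""
    score = 10.0
    score += 30.0 * x["outdated_encryption"]
    score += 25.0 * x["poor_patch_management"]
    score += 15.0 * x["no_mfa"]
    # Interaction: weak encryption is worse when patches are also late.
    score += 10.0 * x["outdated_encryption"] * x["poor_patch_management"]
    return score


def shapley_values(model, instance, baseline, features):
    """Exact Shapley values: weighted average marginal contribution over all coalitions."""
    n = len(features)

    def value(coalition):
        # Features in the coalition take the instance's value; the rest stay at baseline.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in features}
        return model(x)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                total += weight * (value(set(subset) | {f}) - value(set(subset)))
        phi[f] = total
    return phi


vendor = {"outdated_encryption": 1, "poor_patch_management": 1, "no_mfa": 0}
contributions = shapley_values(risk_score, vendor, BASELINE, FEATURES)
for name, contribution in contributions.items():
    print(f"{name}: {contribution:+.1f}")
```

For this toy vendor the score is 75 against a baseline of 10. The attributions are 35 for outdated encryption, 30 for poor patch management, and 0 for MFA, and they sum to the 65-point gap. The 10-point interaction term is split evenly between the two features that cause it.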

"AI algorithms used in cybersecurity to detect suspicious activities and potential threats must provide explanations for each alert. Only with explainable AI can security professionals understand - and trust - the reasoning behind the alerts and take appropriate actions." - Palo Alto Networks

Building on this transparency, platforms like Censinet RiskOps™ extend XAI's capabilities into automated risk management.

Censinet RiskOps™: Streamlining Risk Management with XAI

Censinet RiskOps™ takes XAI a step further by combining automation with human oversight to refine risk management processes. The platform employs human-in-the-loop automation to speed up risk assessments while maintaining transparency and control. For instance, Censinet AI™ enables vendors to complete security questionnaires in seconds, automatically summarizes evidence and documentation, and generates risk reports based on relevant data.

What makes this approach stand out is the balance between automation and human input. Risk teams stay in control by setting configurable rules and reviewing key findings. Tasks are routed to the appropriate stakeholders, such as members of the AI governance committee, for final approval. The platform’s intuitive AI risk dashboard aggregates real-time data, acting as a centralized hub for managing AI policies, risks, and tasks.

This setup functions like "air traffic control" for AI governance, ensuring that the right teams address the right issues at the right time. The result? A more efficient risk reduction process that doesn’t compromise on transparency or accountability.
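The routing idea can be illustrated with a small rule table. This is a hypothetical sketch and does not reflect Censinet's API or internal logic. The thresholds, field names, and reviewer groups are assumptions. What it demonstrates is that the rules are configurable data, and that every finding ends up with a human reviewer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    vendor: str
    risk_score: float        # 0-100, higher is riskier (assumed scale)
    involves_phi: bool
    model_confidence: float  # 0-1, as reported by the model


@dataclass(frozen=True)
class Rule:
    name: str
    reviewer: str
    matches: Callable[[Finding], bool]


# Rules are checked in order; the first match wins.
RULES = [
    Rule("phi_high_risk", "ai_governance_committee",
         lambda f: f.involves_phi and f.risk_score >= 70),
    Rule("low_confidence", "security_analyst",
         lambda f: f.model_confidence < 0.6),
    Rule("high_risk", "risk_team",
         lambda f: f.risk_score >= 70),
]


def route(finding, rules=RULES, default="risk_team"):
    """Return (reviewer, rule_name) so the routing reason can be logged with the finding."""
    for rule in rules:
        if rule.matches(finding):
            return rule.reviewer, rule.name
    return default, "default"


print(route(Finding("imaging-cloud", 85.0, True, 0.9)))
```

Returning the rule name with the reviewer keeps the routing decision itself explainable: an auditor can see which rule sent a finding to which group.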

Measuring XAI's Impact Through Metrics

To gauge the success of XAI in healthcare cybersecurity, organizations track specific performance metrics that highlight improvements in speed, accuracy, and trust. These metrics also help validate the benefits of transparent decision-making.

Conclusion: Building Trust Through Explainable AI

Relying on black box AI systems poses significant risks for healthcare organizations. When the decision-making process is hidden, it becomes nearly impossible to verify accuracy, defend against audits, or comply with regulatory standards. The lack of transparency in such systems undermines trust and makes error detection a daunting task.

Explainable AI (XAI) changes the game for risk management. By providing clear reasoning behind AI predictions, XAI enables teams to verify decisions and quickly address any errors [3]. This level of transparency not only supports compliance but also empowers experts to identify and fix issues before they escalate. Such clarity paves the way for integrating AI safely and effectively into cybersecurity operations.

Censinet RiskOps™ exemplifies how human-in-the-loop automation can speed up risk assessments without sacrificing transparency or control. By allowing risk teams to set rules and review findings, this approach ensures automation enhances rather than replaces critical decision-making.

Healthcare organizations that adopt XAI gain stronger incident response capabilities and more reliable audit trails, which are critical for a solid cybersecurity framework. These systems help reduce risks, meet compliance requirements, and build the trust needed for AI-driven risk management. Fully embracing explainability is not just a regulatory necessity - it’s the foundation for resilient, trustworthy AI systems in healthcare cybersecurity.

FAQs

Why is Explainable AI (XAI) safer than black box AI in healthcare cybersecurity?

Explainable AI (XAI) plays a crucial role in bolstering cybersecurity within the healthcare sector by providing clarity into how AI systems arrive at their decisions. This level of insight helps organizations detect and address potential risks, such as weaknesses in AI models, data tampering, or gaps in regulatory compliance.

When healthcare providers can understand the logic behind AI-generated outcomes, they are better equipped to ensure these systems meet regulatory requirements, minimize errors, and foster confidence in AI-based processes. By enabling teams to make well-informed and accountable decisions, XAI not only enhances security but also improves overall operational performance.

How does Explainable AI support HIPAA compliance in healthcare?

Explainable AI (XAI) plays a key role in helping healthcare organizations meet HIPAA requirements by providing clear insights into how data is processed and decisions are made. This level of transparency allows organizations to show compliance with privacy regulations, manage patient consent effectively, and simplify the often-complex audit process.

By making AI systems easier to understand, XAI also lowers the chances of data breaches or unauthorized disclosures. This not only protects sensitive patient information but also strengthens trust in healthcare cybersecurity efforts.

How do tools like SHAP and LIME make AI systems more transparent?

Tools such as SHAP and LIME play a crucial role in making AI more accessible by simplifying complex model predictions into easy-to-grasp explanations. They highlight how specific features or inputs influence a given decision, helping users better understand how AI systems arrive at their conclusions.

This kind of transparency is especially valuable in critical fields like healthcare and cybersecurity, where trust in AI systems is essential. These tools also empower users to make more informed decisions by identifying and addressing potential biases or mistakes within AI models.
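The local explanation idea behind LIME can be sketched in plain Python. The example below does not use the `lime` package. It perturbs one input, weights each perturbed sample by its closeness to the original, and fits a weighted linear model whose coefficients serve as the explanation. The scoring function, feature names, and scales are hypothetical.

```python
import math
import random


def black_box(x):
    """Toy opaque anomaly scorer (assumed): nonlinear in its two inputs."""
    failed_logins, mb_exfiltrated = x
    return 1.0 / (1.0 + math.exp(-(0.8 * failed_logins + 0.05 * mb_exfiltrated - 4.0)))


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w


def local_surrogate(model, instance, scales, n_samples=500, kernel_width=1.0, seed=0):
    """Fit a distance-weighted linear model around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # perturbation in scaled units
        x = [instance[i] + z[i] * scales[i] for i in range(d)]
        weight = math.exp(-sum(v * v for v in z) / kernel_width ** 2)  # nearer samples count more
        row = [1.0] + z  # intercept plus scaled offsets
        y = model(x)
        # Accumulate the weighted normal equations.
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coefs = solve(xtx, xty)
    return {"intercept": coefs[0], "weights": coefs[1:]}


# Explain one event: 4 failed logins and 20 MB transferred.
explanation = local_surrogate(black_box, [4.0, 20.0], scales=[1.0, 10.0])
print(explanation["weights"])
```

Each weight estimates how much the score changes near this event when a feature moves by one scale unit. The explanation is local: a different event can yield different weights from the same model.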



Key Points:

What risks do black box AI systems create in healthcare cybersecurity?

- Opaque decision-making hides how models access or process sensitive PHI.

- Compliance failures due to unverifiable decisions or undocumented data pathways.

- Adversarial vulnerabilities where attackers exploit blind spots with minimal data.

- Delayed breach detection, increasing operational and patient-safety impact.

- Vendor opacity, where AI suppliers provide limited security or audit transparency.

How does Explainable AI (XAI) mitigate these risks?

- Shows how decisions were made, improving trust and auditability.

- Exposes hidden biases, preventing discriminatory or unsafe outputs.

- Provides traceable logic paths to validate system behavior.

- Supports human validation, enabling safer incident response.

- Aligns with regulatory expectations for transparency and accountability.

How does XAI improve vendor and supply-chain security?

- Breaks down vendor risk scores into understandable components.

- Identifies weaknesses like outdated encryption, misconfigurations, or insecure APIs.

- Improves audit readiness with explainable evidence trails.

- Strengthens assessments across cloud services, medical devices, and integrated systems.

- Supports rapid triage during security reviews and due diligence.

What governance patterns are required for XAI?

- Human‑in‑the‑loop review to prevent overreliance on automation.

- Rigorous model validation before deployment and throughout lifecycle changes.

- Comprehensive decision logs for compliance, auditing, and risk investigations.

- Defined accountability roles across IT, security, clinical, and compliance teams.

- Bias testing and fairness standards for evaluating model performance.

How does Censinet RiskOps™ use XAI to improve AI risk management?

- Automates risk assessments while summarizing evidence in human-readable formats.

- Routes findings directly to governance committees for approval.

- Centralizes AI risk dashboards showing real-time statuses, policies, and exposures.

- Supports explainable workflows that document reasoning and data provenance.

- Maintains human authority through configurable approval rules.

How is XAI applied in real-world healthcare cybersecurity?

- Improving intrusion detection with transparent alert explanations.

- Supporting vendor reviews through interpretable risk scoring.

- Enhancing audit readiness with explainable compliance documentation.

- Strengthening threat analysis via model‑explanation tools like SHAP/LIME.

- Reducing error rates by enabling security teams to validate AI outputs.